In the previous post I started to write about how to set up your unit tests in the repository code. The title was (maybe a bit misleading) “Unit testing your Repositories”. So I had to promise to write an article about the side of the tests as well.

The Problem

As explained in the other post, we only want to test the non-trivial functions in our repositories. We also don’t want to depend on the current state of the database, because this is never sure. So we want to mock parts of our database. This is not the solution for everything, but in many cases it will save us. Look at my previous post for possible problems with this approach.

The Example

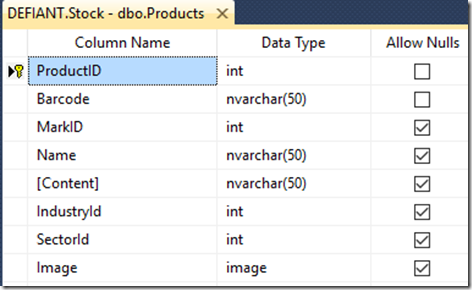

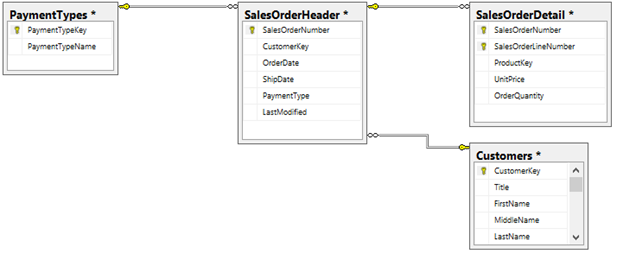

I have a real life example of a database containing sales orders. The database is not completely normalized and the sales order codes are actually a concatenation of a couple of fields. We want to write a function that will calculate the next code for a certain sales representative for a certain season. As you can imagine already, this will involve some string manipulation, conversions, etc.

Currently the code is written as a user function in the database, so we have to convert this to C# using Entity Framework 6 (or better). The following approach won’t work with older versions.

The T-SQL User Function

ALTER FUNCTION [dbo].[GetNextSalesOrderCode]

(

@Season nvarchar(3),

@Prefix nvarchar(3),

@RepPrefix nvarchar(2) — RepresentativePrefix

)

RETURNS nvarchar(50)

AS

BEGIN

DECLARE @Code as nvarchar(50)

DECLARE @PrevCode as nvarchar(50)

declare @MinSoCode as int

SELECT top 1 @MinSoCode = C.MinSoCode

FROM Computers C

INNER JOIN Representatives R ON C.Representative = R.Id

WHERE (R.Prefix = @RepPrefix) AND (C.ComputerName = N’ERP’)

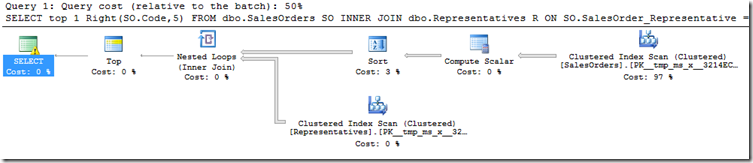

SELECT top 1 @PrevCode = Right(SO.Code,5)

FROM SalesOrders SO

INNER JOIN Representatives R ON SO.Representative = R.Id

where SUBSTRING(SO.Code,4,3)= @Season

and R.Prefix=@RepPrefix

and cast(Right(SO.Code,5) as int)>=@MinSoCode

order by Right(SO.Code,5) DESC

if @PrevCode is null

set @MinSoCode = 0

ELSE

set @MinSoCode = CONVERT(int, @PrevCode)+1

set @Code= @Prefix+‘.’+ @Season + ‘-‘ + @RepPrefix + FORMAT(@MinSoCode,‘00000’)

RETURN @Code

END

This function will in some way return the next sales order code, using non-trivial logic. The main problem is actually that the database isn’t completely normalized, which explains why we need in this case some more logic in our repository.

The repository code

public class SalesordersRepository : Repository, ISalesordersRepository

{

public async Task<string> GetNextSalesOrderCode(string season, string prefix, string representativePrefix)

{

Representative repr = await _db.Representatives.SingleAsync(r => r.Prefix == representativePrefix);

int rPrefix = repr.Id;

RepresentativeComputer comp = await _db.RepresentativeComputers.SingleAsync(c => c.RepresentativeComputer_Representative == rPrefix && c.ComputerName == “ERP”);

int minSoCode = comp.MinSoCode;

int prevCode = await GetPrevCode(season, rPrefix, minSoCode);

return $”{prefix}.{season}–{representativePrefix}{prevCode.ToString(“00000”)}“;

}

// Other methods

}

Because C# as a language is more powerful than SQL, we can write this function a bit more concise (and clear). It still contains enough logic to justify writing a test for it. We also use the function GetPrevCode but to keep things simple we keep this function out of scope. Of course testing it would be done in exactly the same way!

Testing

We follow all the known steps to create a test project, hook it up with the assembly under test, and write a test for the method. As a first attempt we just use the database in its current state. Of course this is bad for several reasons, but it’s a start anyway:

[TestMethod()]

public void GetNextSalesOrderCodeTest()

{

ISalesordersRepository repo = new SalesordersRepository();

string next = repo.GetNextSalesOrderCode(“151”, “S0”, “09”).Result;

System.Diagnostics.Debug.WriteLine(“next: “ + next);

Assert.AreEqual(next, “S0.151-0902001”);

}

We are lucky with one thing: the method doesn’t change the state of the database, so running this test will not have any side effects. But we do depend on the current state of the database, which can (will) be different when we run the test again later, and of course our unit test is not fast, fast, fast! The test code also depends on the connection string, which for DEV may be correct, but in the TEST environment probably not.

Mocking the Database

We want to mock our database, preferably not with too much code. Mocking the database means in this case mocking some known state in the concerned database tables, and then inject this “in-memory” database (SalesOrderEntities) in the repository. I have created a base class Repository that provides the means to inject a SalesOrderEntities implementation. By default it will use the database using EF, when testing we can inject the mocked database using the second constructor (if you want more info on this, see the other articles in my blog). I just give the class here without more explanation:

public class Repository : IDisposable

{

protected SalesOrdersEntities _db;

public Repository()

{

_db = new SalesOrdersEntities();

}

/// <summary>

/// Make DI possible for testing

/// </summary>

/// <param name=”db“></param>

public Repository(SalesOrdersEntities db)

{

_db = db;

}

public void Dispose()

{

if (_db != null)

_db.Dispose();

_db = null;

GC.SuppressFinalize(this);

}

~Repository()

{

Dispose();

}

}

All my repositories derive from this class, giving me always the possibility to inject a mocked database for testing.

Setting up for mocking

I like to use Moq as a mocking framework. There are many other mocking frameworks out there that are equally good, but I’m used to this one. So in my test project I install the Moq package:

Don’t forget to set the default project to your test project.

As all the repositories derive from the Repository class, it seems like a good idea to implement a RepositoryTests class that will set up all the common stuff. Like that we don’t repeat ourselves all the time. In this class we will set up the mock for the SalesOrderEntities, and some of the tables that it contains.

[TestClass]

public class RepositoryTests

{

protected static Mock<SalesOrdersEntities> _dbMock;

protected static Mock<DbSet<Representative>> _representativesMock;

protected static Mock<DbSet<RepresentativeComputer>> _representativeComputersMock;

protected static Mock<DbSet<SalesOrder>> _salesOrdersMock;

public static void Init()

{

SetupRepresentatives();

SetupSalesOrders();

_dbMock = new Mock<SalesOrdersEntities>();

_dbMock.Setup(db => db.Representatives).Returns(_representativesMock.Object);

_dbMock.Setup(db => db.RepresentativeComputers).Returns(_representativeComputersMock.Object);

_dbMock.Setup(db => db.SalesOrders).Returns(_salesOrdersMock.Object);

}

private static void SetupRepresentatives()

{

_representativesMock = new Mock<DbSet<Representative>>();

_representativesMock.Object.AddRange(new Representative[]

{

new Representative { Id = 1, Prefix=“1”},

new Representative { Id = 2, Prefix=“2”},

// other entities, left out for brevity

new Representative { Id = 105, Prefix=“15”},

});

_representativeComputersMock = new Mock<DbSet<RepresentativeComputer>>();

_representativeComputersMock.Object.AddRange(new RepresentativeComputer[]

{

new RepresentativeComputer { Id = 1, ComputerName=“ThnkPad”, MinSoCode=1, MaxSoCode=2000, RepresentativeComputer_Representative=9},

// other entities, left out for brevity

new RepresentativeComputer { Id = 19, ComputerName=“ERP”, MinSoCode=2001, MaxSoCode=4000, RepresentativeComputer_Representative=5},

});

}

private static void SetupSalesOrders()

{

_salesOrdersMock = new Mock<DbSet<SalesOrder>>();

_salesOrdersMock.Object.AddRange(new SalesOrder[]

{

new SalesOrder { Id=21910342, Code = “SO.151-0402009”, SalesOrder_Representative=4 },

// other entities, left out for brevity

new SalesOrder { Id=26183, Code = “SO.151-0402001”, SalesOrder_Representative=4 },

});

}

}

In the test base class I first declare 4 Mock objects. One to mock the SalesOrdersEntities and 3 other to mock the DbSets (the collections with entities). Then I create 2 methods to set up the Representatives (and their computers) and the sales orders. As you can see I’m adding the records hard-coded in these functions. This would involve a lot of typing without the help of our friend Excel.

Intermezzo: Using Excel to generate the code

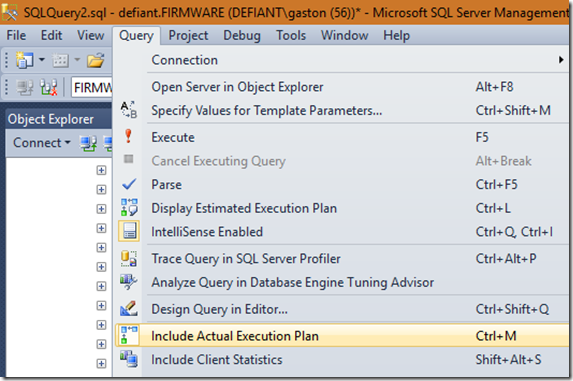

I used SQL Server Management Studio to obtain some records for each table. I then copied these records in an Excel spreadsheet and used a formula to generate the code to instantiate the entities. I only fill the fields that will be necessary now (YAGNI), but having it all in Excel would allow me to easily add more fields when needed. In the screenshots that you see here I removed all the data that could make this recognizable (privacy).

The column [New Object] contains the following formula:

=”new Representative { Id = ” & [@Id] & “, Prefix=”””&[@Prefix]&”””},”

As you can see I can easily add more rows if I want to, to execute more test scenarios. You may want to keep this spreadsheet in your source code control system and treat is like your other source code.

This isn’t rocket science, but it has helped me on several occasions  .

.

The modified test

[TestClass()]

public class SalesordersRepositoryTests : RepositoryTests

{

[ClassInitialize]

public static void Init(TestContext context)

{

RepositoryTests.Init();

}

[TestMethod()]

public void GetNextSalesOrderCodeTest()

{

ISalesordersRepository repo = new SalesordersRepository(_dbMock.Object);

string next = repo.GetNextSalesOrderCode(“151”, “S0”, “09”).Result;

System.Diagnostics.Debug.WriteLine(“next: “ + next);

Assert.AreEqual(next, “S0.151-0902001”);

}

}

2 Things have changed in this test class:

- I call the base class’ Init( ) method to initialize _dbMock.

- I pass _dbMock.Object in the repository constructor (DI).

So let’s run our test and see what happens. This should be good…

Bummer

Running the test gives an unexpected exception:

The problem is that the DbSet mocks don’t implement the IDbAsyncQueryProvider interface, which makes sense because we are not using a database here. So we need to find a workaround for this. In the repository we use the async / await pattern a lot, which depends on this interface.

Following the indicated link brought me to this great article: IQueryable doesn’t implement IDbAsyncEnumerable. I copied the code with the TestDbAsync classes into my project and referenced this in my mocks (as described in the article), so I won’t copy them in this post. I did change my test base class in the following ways:

Creating the InitializeMock<T> method

For each dataset to be mocked the following code must be executed:

var mockSet = new Mock<DbSet<Blog>>();

mockSet.As<IDbAsyncEnumerable<Blog>>()

.Setup(m => m.GetAsyncEnumerator())

.Returns(new TestDbAsyncEnumerator<Blog>(data.GetEnumerator()));

mockSet.As<IQueryable<Blog>>()

.Setup(m => m.Provider)

.Returns(new TestDbAsyncQueryProvider<Blog>(data.Provider));

mockSet.As<IQueryable<Blog>>().Setup(m => m.Expression).Returns(data.Expression);

mockSet.As<IQueryable<Blog>>().Setup(m => m.ElementType).Returns(data.ElementType);

mockSet.As<IQueryable<Blog>>().Setup(m => m.GetEnumerator()).Returns(data.GetEnumerator());

I created a generic method to prevent to copy / paste this code everywhere:

private static Mock<DbSet<T>> InitializeMock<T>(IQueryable<T> data) where T: class

{

var mockSet = new Mock<DbSet<T>>();

mockSet.As<IDbAsyncEnumerable<T>>()

.Setup(m => m.GetAsyncEnumerator())

.Returns(new TestDbAsyncEnumerator<T>(data.GetEnumerator()));

mockSet.As<IQueryable<T>>()

.Setup(m => m.Provider)

.Returns(new TestDbAsyncQueryProvider<T>(data.Provider));

mockSet.As<IQueryable<T>>().Setup(m => m.Expression).Returns(data.Expression);

mockSet.As<IQueryable<T>>().Setup(m => m.ElementType).Returns(data.ElementType);

mockSet.As<IQueryable<T>>().Setup(m => m.GetEnumerator()).Returns(data.GetEnumerator());

return mockSet;

}

This allows me to write the SetupXXX methods like this:

private static void SetupSalesOrders()

{

var data = new List<SalesOrder>

{

new SalesOrder { Id=21910342, Code = “SO.151-0402009”, SalesOrder_Representative=4 },

// other entities, left out for brevity

new SalesOrder { Id=26183, Code = “SO.151-0402001”, SalesOrder_Representative=4 },

}.AsQueryable<SalesOrder>();

_salesOrdersMock = InitializeMock<SalesOrder>(data);

}

The actual SalesOrdersRepositoryTests class remains unchanged. And in case you wondered: yes, my test turns green now.

Conclusion

Writing unit tests for repositories can be done. It requires some work but not as much as one would expect. With the help of Excel (or some other tool) you can generate the data in an easy way. I hope that I have given you a framework for your EF unit testing with this post.

I want to warn again that not everything can be tested using mocks, so you will need to run integration tests eventually. But if you can already fix a lot of bugs (and prevent them from coming back later) using some clever unit tests, then this is a quick win.

References

Testing with a mocking framework (EF6 onwards)

IQueryable doesn’t implement IDbAsyncEnumerable

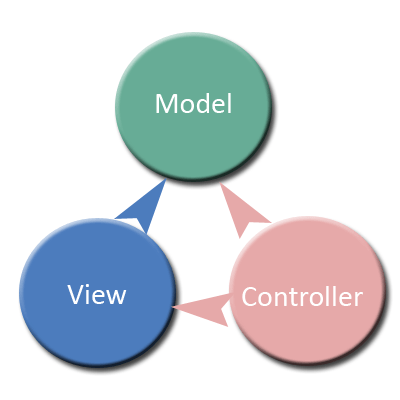

rn typically used for creating web applications. Of course it can be (and is) applied for other types of applications as well. In this article I only talk about APS.NET MVC.

rn typically used for creating web applications. Of course it can be (and is) applied for other types of applications as well. In this article I only talk about APS.NET MVC. I only give a short introduction, to make sure that we’re all on the same page.

I only give a short introduction, to make sure that we’re all on the same page.

Wikipedia has

Wikipedia has