Introduction

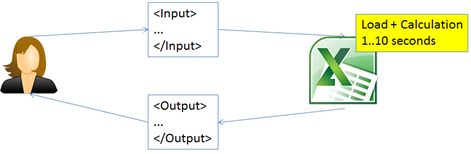

In my current project we let users run simulations. For flexibility, the calculations are performed by Excel spreadsheets. There are many different spreadsheets available, for different kinds of simulations. When the calculation rules for one of the simulations change, it is just a matter of modifying the corresponding Excel spreadsheet. So in the current workflow this is how things happen (simplified):

Some of the spreadsheets contain a lot of data. The advantage of this is that the spreadsheet is completely self-contained, and there is no dependency on a database. This also means that the spreadsheets can easily be tested by the domain specialists.

But as you can see in the picture, some of the spreadsheets become very big, so loading a spreadsheet into memory can sometimes take up to 10 seconds. We are lucky enough (?) to not have too many users running simulations simultaneously. It is not always possible to keep all the different spreadsheets in memory, as memory is still not unlimited.

In some situations it is necessary to recalculate simulations. This is done by a daily batch job. As you can imagine, this job takes a long time to run. And because the job only runs once per day, the users must wait until the next day to see the new results.

As a practical remark: Most of the pictures below contain a link to a page with more explanations.

Problems

As we can see, the design is really flexible but it has its drawbacks:

In the user interface it can take some time for a simulation to finish. When not too many users are performing simulations at the same time, this is more or less acceptable. Once the load on the server becomes heavier the throughput is not sufficient.

This solution is not easily scalable. We can put several servers behind a load balancer, but each server will need a lot of memory and CPU to perform the calculations in a timely manner.

The spreadsheets have a limit on their size. I don’t know the maximum size of an Excel spreadsheet by heart, but I am sure that it is far less than that of any database. Also, a lookup in a database is very fast (provided the indexes are set up correctly), whereas a VLookup (or a similar function) in Excel will plough through the data sequentially. So the solution is not very extensible.

When many users are performing calculations the server is heavily loaded, and at quiet moments the server does nothing. But when the batch job starts, the server is heavily loaded again. Luckily we have a timeframe during the night where the server is less used, so we can then run the batch jobs without disturbing our users.

Introducing a queue

To spread the load of the simulations more evenly over time, we can start by putting requests on a queue. Several clients can put their requests on the queue at a variable rate. The messages are processed by a worker at a consistent rate. As long as there are messages on the queue, the worker will handle them one by one, so no processing time is lost.

So now when a simulation needs to be recalculated, it can be put on the queue and handled in time. The lengthy batch job is not needed anymore.
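As a minimal sketch of this idea, the snippet below uses Python's in-process `queue.Queue` as a stand-in for a real message queue. The `run_simulation` function and the shape of the request dictionaries are assumptions made for illustration; in the real system the worker would load a spreadsheet and calculate.

```python
import queue
import threading


def run_simulation(request):
    # Hypothetical stand-in for loading a spreadsheet and calculating.
    return request["amount"] * 2


def worker(requests, results):
    # Handle messages one by one until the None sentinel arrives.
    while True:
        request = requests.get()
        if request is None:
            break
        results.append((request["id"], run_simulation(request)))


def process_all(incoming):
    requests = queue.Queue()
    results = []
    thread = threading.Thread(target=worker, args=(requests, results))
    thread.start()
    for request in incoming:
        # Clients enqueue at their own pace and do not wait for the result.
        requests.put(request)
    requests.put(None)  # tell the worker that no more work is coming
    thread.join()
    return results
```

With a single worker the requests are handled in the order in which they were put on the queue, whether they come from the UI or from a recalculation.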

Of course with this initial design we introduce new problems. It is clear that requests coming from the UI should be processed before the background messages (formerly known as “the batch job”).

A second problem (that is out of scope for this post) is that we put a message on the queue in a “fire and forget” mode. We just count on the fact that the queue with its worker will handle the message eventually. For the background messages this is ok, but the requests coming from the UI must return a result. This can be done in several ways, one of the most obvious being a response queue. When the worker has finished a calculation the result is put on the response queue, which is handled by a worker on the UI side. This design requires correlation IDs, so that each response can be matched to the request that caused it.
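Although the details are out of scope, a rough sketch may help to show what a correlation ID does. The message layout (`correlation_id`, `payload`, `result`) and the squaring "calculation" are assumptions for illustration only, and the in-process queues stand in for real request and response queues.

```python
import queue
import threading
import uuid

request_queue = queue.Queue()
response_queue = queue.Queue()


def worker():
    # Copy the correlation ID from each request onto its response.
    while True:
        message = request_queue.get()
        if message is None:
            break
        result = message["payload"] ** 2  # hypothetical calculation
        response_queue.put(
            {"correlation_id": message["correlation_id"], "result": result}
        )


def send_request(payload):
    # The client generates the ID and remembers it to find its answer later.
    correlation_id = str(uuid.uuid4())
    request_queue.put({"correlation_id": correlation_id, "payload": payload})
    return correlation_id


def collect_responses(expected):
    # Return {correlation_id: result} for the given number of responses.
    responses = {}
    for _ in range(expected):
        message = response_queue.get(timeout=5)
        responses[message["correlation_id"]] = message["result"]
    return responses
```

The client keeps the ID returned by `send_request` and uses it to pick its own result out of the responses, regardless of the order in which the worker finishes.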

Improving the throughput

The previous solution will improve the average throughput because the batch requests are handled together with the UI requests. But it may take a while to empty the queue.

So the “Competing Consumers Pattern” was invented.

The left side of the queue can still receive requests from multiple (types of) clients. On the right side there can be more than 1 worker processing the messages. More handlers mean that more work is done in a shorter period.

Depending on the queue depth (the number of messages that are waiting on the queue) more workers can be added or removed. This is what we call elasticity.
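The sketch below combines both ideas: several workers compete for messages on one queue, and the number of workers is derived from the queue depth. The scaling rule (one worker per 10 waiting messages, between 1 and 8 workers) is an arbitrary assumption, as is the squaring that stands in for a simulation.

```python
import math
import queue
import threading


def desired_workers(queue_depth, per_worker=10, min_workers=1, max_workers=8):
    # Hypothetical scaling rule: one worker per `per_worker` waiting messages.
    needed = math.ceil(queue_depth / per_worker)
    return max(min_workers, min(max_workers, needed))


def consume(requests, results, lock):
    # Each worker takes the next available message until the queue is empty.
    while True:
        try:
            item = requests.get_nowait()
        except queue.Empty:
            return
        with lock:
            results.append(item * item)  # stand-in for the simulation


def process_with_competing_consumers(items):
    requests = queue.Queue()
    for item in items:
        requests.put(item)
    results, lock = [], threading.Lock()
    count = desired_workers(requests.qsize())
    threads = [
        threading.Thread(target=consume, args=(requests, results, lock))
        for _ in range(count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    # The completion order is not deterministic, so sort before returning.
    return count, sorted(results)
```

In a cloud environment the scaling decision would normally be taken by the platform rather than by the application; the function above only illustrates the principle.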

So now the average time that a message is on the queue will be shorter, and we don’t use more CPU than necessary. This can be important in cloud scenarios where you have to pay per cycle.

The problem that background requests are still mixed with UI requests remains, but they will be handled faster, and in a scalable way.

Giving some priorities

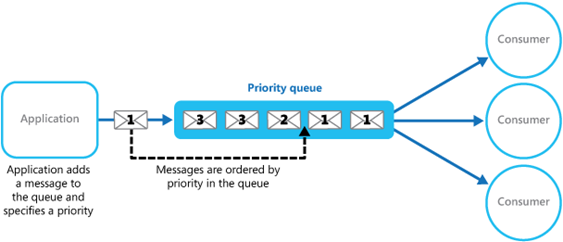

We want UI requests to be executed first. So ideally they are put first on the queue. This takes us right into the “Priority Queue Pattern.”

Each request will receive a priority. When a high priority request is placed on the queue, it will be executed before the lower level requests. So we put the UI requests on the queue with a high priority to make our users happy again.

This pattern can either be implemented with 1 queue handling the priorities, or by creating a high-priority queue and a low-priority queue. The high-priority queue can have more workers than the low-priority queue, saving CPU cycles on the low-priority queue again.
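A minimal sketch of the single-queue variant, using Python's `queue.PriorityQueue`. The two priority constants and the `SimulationQueue` wrapper are names invented for this example; the sequence counter keeps requests with the same priority in arrival order.

```python
import itertools
import queue

UI_PRIORITY = 0          # lower number = handled first
BACKGROUND_PRIORITY = 1


class SimulationQueue:
    """One queue that hands out high-priority requests first."""

    def __init__(self):
        self._queue = queue.PriorityQueue()
        self._sequence = itertools.count()

    def put(self, request, priority):
        # The sequence number breaks ties, so equal priorities stay FIFO.
        self._queue.put((priority, next(self._sequence), request))

    def get(self):
        _priority, _sequence, request = self._queue.get()
        return request

    def empty(self):
        return self._queue.empty()


def drain(sim_queue):
    # Return all waiting requests in the order a worker would handle them.
    handled = []
    while not sim_queue.empty():
        handled.append(sim_queue.get())
    return handled
```

If two background requests and two UI requests are waiting, the UI requests come out first, each group in its original order.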

What about security?

We can create a small component (with its own endpoint) before the queue. This component can verify for each request if the user has the necessary rights to execute the request. We call this the “Gatekeeper Pattern”.

We can also validate the requests before they go on the queue (fire and forget), so we can immediately return a fault to the client. We want to prevent exceptions in the workers, because they pose a problem: we can’t always report the error back to the client. Some solutions are to log the errors, or to create an error queue that can be handled elsewhere. This is also out of scope for this post.
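A gatekeeper that combines both checks could look roughly like this. The permission table, the field names (`user`, `simulation`, `amount`) and the `RequestRejected` exception are all hypothetical; a real gatekeeper would use the application's own security model and validation rules.

```python
import queue

# Hypothetical permission table: which user may run which simulations.
PERMISSIONS = {"alice": {"mortgage"}, "bob": {"mortgage", "pension"}}


class RequestRejected(Exception):
    """Raised so the client gets a fault immediately (fail fast)."""


def gatekeeper(request, target_queue):
    user = request.get("user")
    simulation = request.get("simulation")
    # 1. Security: is this user allowed to run this simulation?
    if simulation not in PERMISSIONS.get(user, set()):
        raise RequestRejected("user is not allowed to run this simulation")
    # 2. Validation: reject bad input before a worker ever sees it.
    amount = request.get("amount")
    if not isinstance(amount, (int, float)) or amount <= 0:
        raise RequestRejected("amount must be a positive number")
    # Only authorized, valid requests reach the queue.
    target_queue.put(request)
```

Because the checks run before the message is queued, the caller still has an open connection on which the fault can be reported.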

Intermediate result 1

The solution that we have so far:

- On the left side we can have many (different) clients sending requests to the queue.

- Before the requests are handled, the gatekeeper verifies the permissions, and also the content of the message. This provides a fail fast mechanism and an initial feedback to the client.

- Then the request is put on the queue with a specific priority.

- The worker roles handle the messages in order of priority. When needed, more workers can be spawned to improve the throughput.

- Finally the results are stored. Possibly the results of the calculations are put on a response queue to be consumed by the client.

Further improving the throughput

Currently the simulation spreadsheet is self-contained. It contains all the necessary data to execute a simulation. Let’s say that one of the input parameters is a zip code, and that we want to look up a record for this zip code. This means that the spreadsheet now contains thousands of rows with zip codes and their associated data, of which only 1 row is needed.

So we could pass the request to a dedicated queue that will enrich the request with the zip data and then pass it to a next queue to perform the rest of the calculations.

Maybe after the calculation is done we want to enrich the results (as an example, we may perform translations). Of course there is a pattern for this: the “Pipes and Filters Pattern”.

Example pipeline:

- Task A: enrich input parameters with data from the database (ex: lookups)

- Task B: perform the Excel simulations

- Task C: enrich the results (ex: translations)

There are some obvious advantages to this approach:

- The spreadsheet doesn’t need to contain the data for the lookups, so it becomes smaller. This means that it will load faster, its memory footprint will be less, and it will be more performant because the (sequential) lookups aren’t necessary anymore.

- The simulation becomes less complex. The domain experts can now concentrate on the problem at hand instead of performing all the lookups.

- Tasks A and C will probably be much faster than task B (the simulation itself). So we can assign more workers to task B to balance the workload.
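The example pipeline can be sketched as three small functions connected by queues. Everything specific here is an assumption for illustration: the zip table, the translation table, and the multiplication that stands in for the Excel simulation in task B.

```python
import queue
import threading

# Hypothetical lookup data that would otherwise live inside the spreadsheet.
ZIP_DATA = {"1000": {"factor": 3}, "2000": {"factor": 5}}
TRANSLATIONS = {"low": "laag", "high": "hoog"}
STOP = object()  # sentinel that flows through the pipeline to shut it down


def enrich_input(request):      # Task A: database-style lookup
    return {**request, **ZIP_DATA[request["zip"]]}


def simulate(request):          # Task B: stand-in for the Excel simulation
    score = request["amount"] * request["factor"]
    return {**request, "score": score, "label": "high" if score > 100 else "low"}


def enrich_result(result):      # Task C: translate the outcome
    return {**result, "label": TRANSLATIONS[result["label"]]}


def stage(task, inbox, outbox):
    # A filter: read from one pipe, apply the task, write to the next pipe.
    while True:
        message = inbox.get()
        if message is STOP:
            outbox.put(STOP)
            return
        outbox.put(task(message))


def run_pipeline(requests):
    tasks = [enrich_input, simulate, enrich_result]
    pipes = [queue.Queue() for _ in range(len(tasks) + 1)]
    threads = [
        threading.Thread(target=stage, args=(task, pipes[i], pipes[i + 1]))
        for i, task in enumerate(tasks)
    ]
    for thread in threads:
        thread.start()
    for request in requests:
        pipes[0].put(request)
    pipes[0].put(STOP)
    results = []
    while True:
        message = pipes[-1].get()
        if message is STOP:
            break
        results.append(message)
    for thread in threads:
        thread.join()
    return results
```

Each stage here has a single worker, so the order of the requests is preserved. To give task B more capacity, more `stage` threads could be started on the same pair of queues, at the cost of a slightly more involved shutdown.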

Adding more queues to simplify the work

In the current design every request must be analyzed to see which type of simulation must be executed. It would be simpler to have a queue or a pipeline per type of simulation. This can be accomplished by the “Message Router Pattern”.

The first queue implements the Message Router. Based on the content of the request, the message is routed to one of the queues.

Each type of simulation gets its own queue, making the processing per simulation simpler. Of course more queues will be involved, and it may be a good idea to start drawing the solution now.
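A content-based router can be as small as a dictionary lookup. In this sketch the `simulation` field and the queue names are assumptions, and the dead-letter queue for unknown types is an addition that is not part of the design described above.

```python
import queue


def build_routes(simulation_types):
    # One dedicated queue per type of simulation.
    return {name: queue.Queue() for name in simulation_types}


def route(message, routes, dead_letter):
    # Inspect the content of the message and forward it to the matching queue.
    target = routes.get(message.get("simulation"))
    if target is None:
        # Unknown type: park the message instead of losing it (assumption).
        dead_letter.put(message)
        return "dead-letter"
    target.put(message)
    return message["simulation"]
```

The workers behind each queue then only need to know about one type of simulation.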

Intermediate Result 2

The flow now becomes:

- A client sends a request to the Gatekeeper endpoint. If the request is allowed and valid, it is passed to the Message Router.

- The Message Router analyzes the content of the request, and sends it to the corresponding queue (Simulation 1, 2 or 3).

- The simulation queues are implemented as a pipeline where the input is enriched, the simulation is performed and the output is enriched. Finally the result is stored.

- Depending on the tasks to be performed in each queue one or more workers can be assigned. This makes the solution highly scalable.

There are some more advantages:

- Separation of Concerns. The implementation of each worker is simple because the whole workload is separated over multiple simple jobs.

- Monitoring. It is easy to see where messages are in the process. This is impossible in a monolithic implementation.

- Find the bottleneck. We only need to check the queue depths to find out where a possible bottleneck is. We can then assign more workers to this queue (or let Azure do this automatically).
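Finding the bottleneck by queue depth can be sketched in a few lines. The queue names are hypothetical, and with Python's in-process queues `qsize()` is only approximate while other threads are producing or consuming; a hosted queue service would expose its own depth metric.

```python
import queue


def queue_depths(queues):
    # Number of messages currently waiting on each named queue.
    return {name: q.qsize() for name, q in queues.items()}


def find_bottleneck(queues):
    # The deepest queue is the first candidate for extra workers.
    depths = queue_depths(queues)
    name = max(depths, key=depths.get)
    return name, depths[name]
```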

Caching

Performing the simulation in one service made it very hard to cache the spreadsheets. The spreadsheets were big, and the many types of simulations would all have to reside in one address space. Now we can load each spreadsheet only in the worker role(s) where it is needed, resulting in the “Cache-Aside Pattern”.

The data for the lookups (enriching of the input parameters) can easily be kept in memory and the data for the translations as well.
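As a minimal sketch of cache-aside inside a worker: the worker first looks in its local cache and only falls back to the slow load on a miss. The `SpreadsheetCache` class and its `loader` callback are invented for this example; the loader stands in for opening the Excel file or querying the database.

```python
class SpreadsheetCache:
    """Cache-aside: check the cache first, load and store on a miss."""

    def __init__(self, loader):
        self._loader = loader  # slow operation, e.g. opening the spreadsheet
        self._cache = {}
        self.loads = 0         # counts how often the slow path was taken

    def get(self, name):
        if name not in self._cache:
            # Cache miss: load from the source and keep it for next time.
            self._cache[name] = self._loader(name)
            self.loads += 1
        return self._cache[name]

    def invalidate(self, name):
        # Call this when the spreadsheet or lookup data has changed.
        self._cache.pop(name, None)
```

Because each worker only handles one type of simulation, its cache only ever holds the spreadsheet and lookup data for that type.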

Final Result

By separating all the workers it is easy to cache only the data that is needed. Client processes can be on different servers, and the worker processes as well. So we have effectively decoupled the clients from the workers. The throughput can be easily managed by playing with the number of workers, and the efficiency can be greatly enhanced by using caching.

In the end the implementation looks more complicated, but it is implemented in small, simple pieces that work together.

Conclusion

In this post I tried to take you through a couple of cloud design patterns. It is clear that this solution is very well suited to run in the cloud, because a lot of the functionality is already available. For example in Azure it is quite easy to set up the necessary queues, define the workers, and make it work.

There are many variations on this solution, each with its own advantages and drawbacks. So this is not THE solution to all problems. But it does show that we can create scalable, performant solutions by decoupling functionality using queue patterns.

If you have any ideas to add more patterns, or use different ones in this solution, feel free to use the comments section!